AI-powered intrusion detection systems (IDS) use machine learning to monitor networks, identify threats, and respond to breaches faster than older, rule-based systems. They analyze behavior patterns, detect anomalies, and adapt to evolving threats, offering businesses better security against cyberattacks like zero-day exploits and insider threats. Key benefits include:

- Higher Detection Accuracy: Some AI systems report detection rates as high as 99.9%, including for previously unknown attack methods.

- Reduced Costs and Faster Response: AI can save businesses $2.2 million per breach and reduce response time by nearly 100 days.

- Integration Capabilities: Modern AI IDS connects with cloud platforms, physical security systems, and existing tools like SIEM and SOAR.

To implement AI IDS effectively:

- Assess Security Needs: Identify critical assets, vulnerabilities, and compliance requirements (e.g., FISMA, NIST standards).

- Choose a Deployment Model: Options include network-based, host-based, cloud-native, or hybrid systems.

- Minimize False Positives: Use AI’s context-aware analysis to reduce alert fatigue.

- Plan for Maintenance: Regularly update models, monitor for drift, and retrain with new data.

A balanced approach combining AI automation and human oversight ensures better security outcomes while addressing challenges like model errors and compliance risks.

Core Components of AI Intrusion Detection

Key Features of AI IDS

An AI-based Intrusion Detection System (IDS) typically includes a data ingestor, scorer, behavioral analytics engine, alert manager, and automated response system. Together, these components work to identify threats with impressive speed and precision.

The data ingestor is responsible for collecting raw data from sources like network traffic, system logs, and user activity. It then normalizes this data for analysis – a process that can take as little as 10 minutes for a new data source. After this, the scorer, powered by a lightweight neural network, processes the data to determine the probability of malicious activity.
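To make the scoring step concrete, here is a minimal Python sketch. It is not any vendor's implementation: a single logistic unit stands in for the lightweight neural network, and the feature names (`bytes_out_kb`, `failed_logins`, `off_hours`), the weights, and the bias are hypothetical values chosen for illustration.

```python
import math

# Hypothetical, hand-picked weights for illustration only; a real scorer
# would learn these from labeled traffic.
WEIGHTS = {"bytes_out_kb": 0.004, "failed_logins": 0.9, "off_hours": 1.2}
BIAS = -4.0


def normalize(event: dict) -> dict:
    """Map a raw event to numeric features, defaulting missing fields to 0."""
    return {name: float(event.get(name, 0)) for name in WEIGHTS}


def score(event: dict) -> float:
    """Return a probability-like score in (0, 1) using a logistic function."""
    features = normalize(event)
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


# A routine event versus one with heavy outbound traffic and failed logins.
quiet = score({"bytes_out_kb": 50, "failed_logins": 0, "off_hours": 0})
noisy = score({"bytes_out_kb": 900, "failed_logins": 5, "off_hours": 1})
print(f"quiet={quiet:.3f} noisy={noisy:.3f}")
```

With these assumed weights, the routine event scores close to 0 and the suspicious one close to 1. The point is the shape of the pipeline (normalize, then score), not the specific numbers.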

The behavioral analytics engine plays a critical role by defining what "normal" behavior looks like for systems and users. This allows it to flag deviations that could indicate insider threats or unknown attack methods. The alert manager then steps in to correlate and prioritize alerts, condensing potentially hundreds of notifications into a manageable list of genuine threats – accomplishing in minutes what might otherwise take analysts days. Finally, the automated response system takes swift action, such as isolating compromised devices or blocking suspicious IP addresses, executing these measures in seconds.
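One simple way to picture a behavioral baseline is a z-score check: learn the mean and spread of a metric, then flag values that fall too far outside it. The sketch below assumes a hypothetical metric (logins per hour for one user) and a threshold of three standard deviations; production engines typically use richer models.

```python
import statistics


def is_anomalous(history: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag `observed` if it lies more than `threshold` standard deviations
    from the mean of `history` (the learned "normal" behavior)."""
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        # A perfectly flat baseline: any change at all is a deviation.
        return observed != mean
    return abs(observed - mean) / stdev > threshold


# Hypothetical baseline: logins per hour for one user over the past week.
logins_per_hour = [20, 22, 19, 21, 23, 20, 22]

print(is_anomalous(logins_per_hour, 24))  # slightly busy hour
print(is_anomalous(logins_per_hour, 60))  # sharp spike
```

The threshold is the tuning knob here: lowering it catches subtler deviations at the cost of more alerts for the alert manager to sort through.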

"AI-powered security monitoring replaces a set of static rules with a learning system that adapts to your network."

– Adolfo Usier, Author

Some systems also incorporate generative AI, which offers plain-language explanations of alerts and detailed remediation steps for security teams, making it easier to act quickly and effectively.

Deployment Models for Businesses

Choosing the right deployment model depends on your business’s size, infrastructure, and security needs:

- Network-based IDS: Monitors traffic across the entire network, providing centralized oversight.

- Host-based IDS: Focuses on individual devices, analyzing logs and system calls to protect critical endpoints.

- Cloud-native IDS: Designed for cloud environments, it integrates with tools like AWS CloudWatch or Azure Monitor to handle large-scale, high-dimensional data.

- Hybrid IDS: Combines on-premises and cloud-based monitoring, making it ideal for businesses operating in both environments. This approach excels at detecting coordinated attacks across multiple platforms.

Reducing False Positives and Improving Accuracy

AI-based IDS tools excel at reducing false positives, a common challenge in traditional security setups. By analyzing context (such as the time of activity, user behavior, or the sequence of system calls), AI can distinguish between legitimate actions and potential threats, going beyond simple pattern matching.

Take BrightCo as an example. In October 2025, the company deployed AI-based monitoring, built on a lightweight neural network, across its 50-device office network. Before the upgrade, its rule-based firewall generated 3–4 false alarms daily. After deploying the AI scorer, BrightCo cut alert volume by 70% and still caught a credential-stealing script that attempted to exfiltrate data, all for less than $10 per month in cloud credits on a single server.

One of the standout advantages of AI is its ability to learn continuously. By analyzing both historical and real-time data, AI models adapt to new threat patterns, becoming more accurate over time. Unlike traditional systems that rely on static signatures requiring manual updates, AI evolves automatically, equipping it to detect zero-day attacks and novel tactics. Additionally, alert correlation groups related notifications into a single "attack-level" finding, allowing security teams to focus on genuine issues rather than sifting through redundant alerts.
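Alert correlation can be sketched in a few lines of Python. The version below is an assumption-laden simplification: it groups alerts by host and starts a new finding whenever more than five minutes pass between consecutive alerts. The field names (`host`, `ts`, `rule`) and the five-minute gap are hypothetical; real correlation engines also weigh users, processes, and attack stages.

```python
from collections import defaultdict


def correlate(alerts: list[dict], window_seconds: int = 300) -> list[dict]:
    """Group alerts from the same host into one finding when each alert
    arrives within `window_seconds` of the previous one."""
    by_host = defaultdict(list)
    for alert in sorted(alerts, key=lambda a: a["ts"]):
        by_host[alert["host"]].append(alert)

    findings = []
    for host, host_alerts in by_host.items():
        current = [host_alerts[0]]
        for alert in host_alerts[1:]:
            if alert["ts"] - current[-1]["ts"] <= window_seconds:
                current.append(alert)
            else:
                findings.append({"host": host, "alerts": current})
                current = [alert]
        findings.append({"host": host, "alerts": current})
    return findings


# Hypothetical alerts; `ts` is seconds since the start of the shift.
alerts = [
    {"host": "pc-1", "ts": 0, "rule": "port-scan"},
    {"host": "pc-2", "ts": 50, "rule": "dns-anomaly"},
    {"host": "pc-1", "ts": 120, "rule": "brute-force"},
    {"host": "pc-1", "ts": 200, "rule": "new-admin-account"},
    {"host": "pc-1", "ts": 4000, "rule": "port-scan"},
]

findings = correlate(alerts)
for finding in findings:
    print(finding["host"], [a["rule"] for a in finding["alerts"]])
```

Here five raw alerts collapse into three findings, and the three closely spaced alerts on `pc-1` surface as a single item for an analyst to review.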

"Machine learning is a computer model that you can train to recognize things. This requires an enormous amount of training data. The more and the better the data you give the system, the better it can ultimately learn and perform."

– Tijmen Mulder, Incident Response Lead, Eye Security


Designing an AI Intrusion Detection Strategy


When it comes to protecting your business with AI, crafting a strong intrusion detection strategy is crucial. Let’s break down how to approach this step-by-step.

Assessing Business Security Needs

The first step is understanding what you need to protect. Start by identifying your critical assets and vulnerabilities. To do this, create an AI Bill of Materials (AI-BOM) – a detailed inventory of all your AI models, datasets, pipelines, and dependencies like GPUs, cloud services, and APIs. This will help you map out your attack surface and prioritize areas that need protection.
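An AI-BOM does not need special tooling to get started. As a rough sketch, it can begin as a list of typed records plus a couple of checks. The field names and sample entries below are hypothetical, not a standard schema.

```python
from dataclasses import dataclass, field


@dataclass
class AIBomEntry:
    name: str
    kind: str   # "model", "dataset", "pipeline", or "dependency"
    owner: str  # team accountable for the asset; empty if unassigned
    depends_on: list[str] = field(default_factory=list)


def unowned(entries: list[AIBomEntry]) -> list[str]:
    """Assets nobody is accountable for."""
    return [e.name for e in entries if not e.owner]


def missing_dependencies(entries: list[AIBomEntry]) -> list[str]:
    """Dependencies that are referenced but not inventoried themselves."""
    known = {e.name for e in entries}
    return sorted({d for e in entries for d in e.depends_on if d not in known})


# Hypothetical inventory for illustration.
inventory = [
    AIBomEntry("ids-scorer", "model", "secops", ["netflow-2025", "gpu-node-1"]),
    AIBomEntry("netflow-2025", "dataset", ""),
]

print(unowned(inventory))
print(missing_dependencies(inventory))
```

Both checks point at attack surface that is easy to miss: an asset with no owner, and a dependency that is in use but was never inventoried.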

AI-specific threats require a different lens. Use frameworks like MITRE ATLAS and OWASP Top 10 for LLMs to assess risks unique to AI, such as model poisoning, prompt injection, or adversarial examples. Traditional methods alone won’t catch these. And the stakes? They’re high. Phishing attacks have surged by 1,265% due to generative AI, and 40% of all email threats now leverage AI.

Another critical area is ensuring data security. Track data lineage to avoid intellectual property issues, set clear boundaries for AI workload access, and define risk tolerance. For example, you might establish a policy like “no PII in model outputs.” Align these efforts with regulations such as the EU AI Act, U.S. executive orders, or ISO/IEC 42001.

Finally, determine which tasks should remain human-led, which can be AI-assisted, and which can be fully automated. This balance ensures accountability while leveraging AI effectively.

Once you’ve identified vulnerabilities and attack surfaces, it’s time to outline your operational requirements for an AI intrusion detection system (AI IDS).

Gathering Requirements for AI IDS

To design your AI IDS, start by mapping out data flows. Identify where exposure points exist and how the system can integrate with your existing tools like SIEM, SOAR, and EDR platforms, as well as electronic lock systems. With over 70% of organizations now using managed AI services, ensuring compatibility with cloud platforms is often essential.

"AI can process massive amounts of data at lightning speed, identifying hidden threats that traditional security measures might overlook."

– Qualys

Decide whether you’re focusing on IT (enterprise) or OT (operational technology) environments. OT systems, in particular, demand strict oversight, as they control physical infrastructure where safety is paramount. For example, CISA’s 2025 principles emphasize that AI should never make safety-critical decisions in OT environments. You’ll also need to evaluate whether your team has the resources to handle ongoing tasks like model retraining, monitoring drift, and triaging alerts.

If you’re running AI locally, make sure your hardware meets the minimum requirements. As of 2025, this includes an Intel i7 or AMD Ryzen 7 CPU, 16GB RAM (32GB recommended), and an NVIDIA RTX 3060 GPU or better.

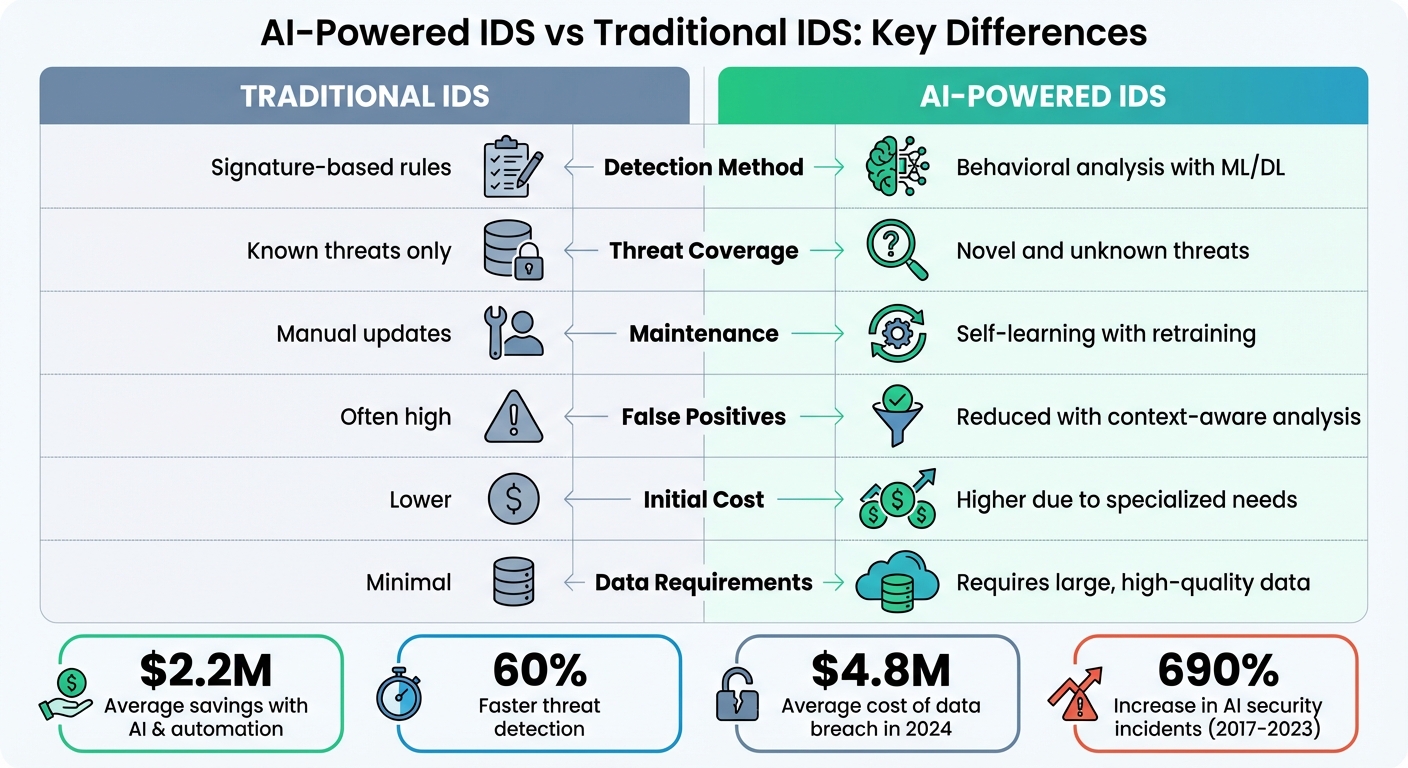

Comparing AI and Traditional IDS

Understanding the differences between traditional and AI-powered intrusion detection systems can help you set realistic goals and allocate resources wisely. Here’s a quick comparison:

| Feature | Traditional IDS | AI-Powered IDS |
|---|---|---|
| Detection Method | Signature-based rules | Behavioral analysis with ML/DL |
| Threat Coverage | Known threats only | Novel and unknown threats |
| Maintenance | Manual updates | Self-learning with retraining |
| False Positives | Often high | Reduced with context-aware analysis |
| Initial Cost | Lower | Higher due to specialized needs |
| Data Requirements | Minimal | Requires large, high-quality data |

The financial impact of choosing AI is clear. Companies using AI and automation in cybersecurity save an average of $2.2 million compared to those relying on traditional methods. Meanwhile, the average cost of a data breach hit $4.8 million in 2024. AI-powered systems also detect threats up to 60% faster and offer broader coverage, but they come with their own challenges. For instance, AI security incidents rose by 690% between 2017 and 2023, with 73% of enterprises experiencing at least one AI-related security event in a single year.

"AI isn’t replacing analysts; it’s reshaping their roles. The key is assigning tasks appropriately. Let AI assist with triage, enrichment, or prioritization while humans supervise."

– Nick Hunter, Principal Technical Product Marketing Manager, Cisco/Splunk

Before investing in new tools, take a close look at your existing SIEM and SOAR platforms. Many already include AI-driven features for clustering alerts and prioritizing threats, which might be underutilized. A hybrid approach – combining signature-based detection for known threats with AI models for detecting new ones – often provides the best results.

Implementing AI Intrusion Detection Systems

Deployment Roadmap

Start by reviewing your existing security tools to uncover any unused AI features. Many SIEM and SOAR platforms already come equipped with AI capabilities for tasks like alert clustering and prioritizing risks. This review helps clarify the specific problem your AI intrusion detection system (IDS) will address – whether that’s cutting down triage time, improving detection accuracy, or managing alert overload. It also identifies the primary users, such as your SOC analysts.

Next, conduct a risk assessment using frameworks like MITRE ATLAS or OWASP’s generative AI risk inventories to identify vulnerabilities unique to AI systems. This step complements your usual security evaluations with an AI-specific focus.

Begin with a pilot program targeting low-risk, high-volume tasks, such as alert clustering or ticket enrichment. This approach allows you to demonstrate the system’s value while minimizing potential disruptions to critical operations. Once the pilot proves effective, move to full deployment by integrating your AI IDS into broader threat detection, investigation, and response (TDIR) workflows. The system should draw data from across your network, including endpoints, cloud platforms, and identity systems.

It’s essential to establish feedback loops where analysts can flag and correct AI errors. These corrections help fine-tune the system over time and build trust among your team.

"Trust grows when systems are accountable. Analysts are more likely to embrace AI if they know it can be monitored, corrected, and improved."

– Nick Hunter, Principal Technical Product Marketing Manager, Cisco/Splunk

| Deployment Phase | Key Activities | Tools/Frameworks |
|---|---|---|
| Assessment | Audit embedded AI, define success metrics | SIEM/SOAR Audit, Security Copilot |
| Risk Discovery | Threat modeling, data risk assessment | MITRE ATLAS, OWASP, Microsoft Purview |
| Pilot | Low-risk task automation (alert clustering) | Embedded AI features |
| Integration | Connect SIEM, SOAR, and EDR | Microsoft Sentinel, Defender XDR |
| Governance | Log activity, monitor for prompt injection | RBAC, Audit Logs |

These steps create a clear path for integrating AI IDS into your existing security environment.

Integrating with Existing Systems

Once you’ve followed the deployment roadmap, focus on integration. This often involves using unified security portals to consolidate data from multiple sources. For example, you can merge SIEM data into XDR platforms like Microsoft Defender, creating a single, comprehensive view of alerts, vulnerabilities, and other security data. Specialized data connectors can help transfer service data from XDR and cloud platforms to your central SIEM.

Adopt Zero Trust principles for integration: verify through automated analysis, limit AI workloads to the least privilege necessary, and continuously scan for vulnerabilities. Clearly map out which tasks will be managed by humans, supported by AI, or fully automated, ensuring no gaps in your incident response process.

For secure authentication, use managed identities to connect AI services. This avoids the need to store credentials and reduces the risk of unauthorized access. Additionally, virtual networks and private links can help isolate AI applications at the network level, preventing lateral movement in case of a breach.

When integrating physical security systems – such as access control or surveillance – consider linking device risk signals to conditional access protocols. For example, compromised hardware can be automatically isolated to prevent further damage. Businesses that also use physical security services, like those offered by Sherlock’s Locksmith, can enhance their overall defense by coordinating digital and physical intrusion detection systems.

Define clear "rules of engagement" for AI actions. Specify which tasks the AI can handle autonomously, such as clustering alerts, and which require human approval, like isolating critical devices or blocking network traffic.
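Rules of engagement are easier to enforce when they live in code or configuration rather than in a policy document alone. The sketch below is one hypothetical way to express them: the action names and their assignments are illustrative, and anything not listed falls back to the most restrictive mode.

```python
from enum import Enum


class Mode(Enum):
    AUTOMATED = "automated"      # AI may act on its own
    AI_ASSISTED = "ai-assisted"  # AI proposes, a human approves
    HUMAN_LED = "human-led"      # AI may not trigger the action at all


# Hypothetical assignments for illustration.
RULES = {
    "cluster_alerts": Mode.AUTOMATED,
    "enrich_ticket": Mode.AUTOMATED,
    "block_network_traffic": Mode.AI_ASSISTED,
    "isolate_critical_device": Mode.AI_ASSISTED,
}


def may_execute(action: str, human_approved: bool = False) -> bool:
    """Decide whether the AI-driven workflow may carry out `action`."""
    mode = RULES.get(action, Mode.HUMAN_LED)  # unknown actions are human-led
    if mode is Mode.AUTOMATED:
        return True
    if mode is Mode.AI_ASSISTED:
        return human_approved
    return False


print(may_execute("cluster_alerts"))
print(may_execute("isolate_critical_device"))
print(may_execute("isolate_critical_device", human_approved=True))
```

Defaulting unlisted actions to human-led means a newly added capability cannot run autonomously until someone explicitly decides it should.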

U.S. Business Considerations

After deployment and integration, U.S. organizations should align with federal cybersecurity standards. For instance, follow NIST SP 800-94 guidelines for designing and maintaining intrusion detection systems. Businesses working with federal agencies must also comply with the Federal Information Security Modernization Act (FISMA) and relevant OMB Circulars.

Complement these traditional frameworks with the NIST AI Risk Management Framework (AI RMF) and CISA’s Secure by Design principles, which emphasize accountability and transparency in AI security practices.

Another important factor is data residency. Ensure sensitive logs used for AI training or operations stay within approved geographic boundaries and comply with your organization’s data handling policies. Tools like Microsoft Purview allow you to classify data with sensitivity labels and enforce access controls.

Lastly, maintain a comprehensive inventory of AI assets. Use tools like Azure Resource Graph Explorer to track all AI workloads – both generative and non-generative – across your environment. This ensures you stay aware of the AI systems operating within your network.

Maintaining and Optimizing AI Intrusion Detection

Daily Operations and Alert Management

Once your AI Intrusion Detection System (IDS) is up and running, consistent daily management is crucial to ensure it performs effectively. Start by establishing a daily workflow to handle incident triage, confirm data connectors are functioning, and verify that automated playbooks are executing as intended. AI can streamline this process by consolidating duplicate alerts and filtering out benign findings, allowing Security Operations Center (SOC) analysts to concentrate on real threats. Keep an eye on key metrics like True Positive Rate (TPR) and False Positive Rate (FPR) to monitor the system’s accuracy. Additionally, set up feedback loops so analysts can flag errors and provide corrections to improve the model over time. These practices help maintain the security framework you built during deployment.
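Both rates come straight from the confusion matrix: TPR = TP / (TP + FN) and FPR = FP / (FP + TN). The Python sketch below computes them from analyst-confirmed labels. The sample data is made up for illustration.

```python
def rates(labels: list[int], predictions: list[int]) -> tuple[float, float]:
    """Return (TPR, FPR). `labels` are analyst-confirmed outcomes and
    `predictions` are the model's verdicts; 1 = malicious, 0 = benign."""
    pairs = list(zip(labels, predictions))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)
    tn = sum(1 for y, p in pairs if y == 0 and p == 0)
    tpr = tp / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return tpr, fpr


# Hypothetical day of triage: 3 real incidents, 5 benign events.
labels = [1, 1, 1, 0, 0, 0, 0, 0]
predictions = [1, 1, 0, 1, 0, 0, 0, 0]

tpr, fpr = rates(labels, predictions)
print(f"TPR={tpr:.2f} FPR={fpr:.2f}")
```

Tracking the two together matters: a model can push TPR up simply by alerting on everything, and only the FPR will show the cost.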

"AI isn’t replacing analysts; it’s reshaping their roles. The key is assigning tasks appropriately… AI does the sorting, humans make the decision."

– Nick Hunter, Principal Technical Product Marketing Manager, Cisco/Splunk

The benefits of integrating AI into cybersecurity are significant. Organizations that use AI and automation report average savings of $3.05 million and save 74 workdays when responding to security incidents. Advanced teams even aim to contain threats within five minutes of detection.

Model Retraining and Updates

Beyond daily operations, your AI models need regular updates to keep pace with emerging threats. Monitor for "model drift", which occurs when the system’s accuracy declines due to changes in data or threats, and retrain the model promptly when needed. Feedback from daily alert reviews plays a key role in shaping retraining cycles.
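A drift check can start out very simply. The sketch below assumes analysts record whether each model verdict turned out to be correct, then compares recent accuracy against the accuracy measured at deployment. The 5-point tolerance and the 50-sample minimum are arbitrary illustrative values, not recommendations.

```python
def needs_retraining(
    baseline_accuracy: float,
    recent_outcomes: list[bool],
    tolerance: float = 0.05,
    min_samples: int = 50,
) -> bool:
    """Return True when recent accuracy has dropped more than `tolerance`
    below the baseline. `recent_outcomes` holds one bool per reviewed alert:
    True if the analyst confirmed the model's verdict."""
    if len(recent_outcomes) < min_samples:
        return False  # too little feedback to judge drift
    recent_accuracy = sum(recent_outcomes) / len(recent_outcomes)
    return baseline_accuracy - recent_accuracy > tolerance


# Hypothetical feedback: 100 reviewed alerts, 85 of them confirmed.
drifting = [True] * 85 + [False] * 15
print(needs_retraining(0.95, drifting))
```

Statistical tests on the input data can detect drift earlier than accuracy does, but this kind of feedback-based check is a reasonable first signal because it reuses the reviews analysts already perform.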

Incorporate adversarial training every few months by adding challenging inputs (around 10–20% of the training data) to make your model more robust. Use Git for version control and a tracking tool like MLflow to record changes in code, datasets, and model versions. Regular checkpoints during retraining ensure you can roll back if something goes wrong.
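The 10–20% figure refers to the share of the final training set, so the number of adversarial samples to add depends on how much clean data you have: for a target share s and n clean samples, you need roughly s × n / (1 − s). The sketch below applies that arithmetic. The 15% default, the sample tuples, and the fixed seed are assumptions for illustration.

```python
import random


def mix_adversarial(clean: list, adversarial: list, share: float = 0.15, seed: int = 0) -> list:
    """Return a shuffled training set in which adversarial samples make up
    approximately `share` of the total."""
    if not 0 < share < 1:
        raise ValueError("share must be between 0 and 1")
    needed = round(share * len(clean) / (1 - share))
    if needed > len(adversarial):
        raise ValueError("not enough adversarial samples for the requested share")
    rng = random.Random(seed)  # fixed seed keeps retraining runs reproducible
    mixed = clean + rng.sample(adversarial, needed)
    rng.shuffle(mixed)
    return mixed


# Hypothetical data: 850 clean samples and a pool of 300 adversarial ones.
clean = [("clean", i) for i in range(850)]
adversarial = [("adv", i) for i in range(300)]

mixed = mix_adversarial(clean, adversarial)
adv_count = sum(1 for kind, _ in mixed if kind == "adv")
print(len(mixed), adv_count)
```

With 850 clean samples and a 15% target, the function draws 150 adversarial samples, giving a 1,000-sample training set.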

Stay proactive with weekly updates to analytics rules and monthly reviews of access permissions and data retention policies. Every quarter, bring together legal, security, and data science teams for a cross-functional review to adjust controls in response to new regulations or evolving threats. According to Gartner, 60% of AI projects may fail by 2026 without proper data management practices. To keep your models organized, maintain a "security passport" that records details like the threat model, training data inventory, validation results, and incident logs.

Validation and Governance

To ensure long-term reliability, rigorous validation and governance are essential. Regularly test your AI IDS through red-teaming exercises and adversarial simulations to uncover hidden weaknesses. Automated attack simulations can confirm whether detection rules work as intended and ensure seamless alert-to-response transitions using SOAR playbooks.

Protect your models by using cryptographic signing to verify that production models haven’t been altered or replaced. Maintain tamper-resistant forensic logs for at least 90 days to detect and analyze sophisticated attacks. Map detections to the MITRE ATT&CK framework to identify coverage gaps and prioritize alerts based on adversary tactics. Adopting Zero Trust principles – treating every interaction as untrusted until verified – adds another layer of security for data ingestion, training, and inference processes. Store detection rules in version-controlled repositories with CI/CD pipelines to ensure they’re reviewed regularly and to prevent alert fatigue.
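Finding coverage gaps against MITRE ATT&CK boils down to a set comparison between the techniques you care about and the techniques your detections are tagged with. In the sketch below, the technique IDs are real ATT&CK identifiers, but the selection of priorities and the detection rule names are hypothetical.

```python
# Techniques the team has prioritized (an illustrative selection).
PRIORITY_TECHNIQUES = {
    "T1046": "Network Service Discovery",
    "T1078": "Valid Accounts",
    "T1110": "Brute Force",
    "T1041": "Exfiltration Over C2 Channel",
}

# Hypothetical detection rules, each tagged with the technique it covers.
DETECTIONS = {
    "port-scan-burst": "T1046",
    "login-failure-spike": "T1110",
    "impossible-travel": "T1078",
}


def coverage_gaps(priority: dict[str, str], detections: dict[str, str]) -> dict[str, str]:
    """Return prioritized techniques that no detection rule is mapped to."""
    covered = set(detections.values())
    return {tid: name for tid, name in priority.items() if tid not in covered}


gaps = coverage_gaps(PRIORITY_TECHNIQUES, DETECTIONS)
print(gaps)
```

In this example, exfiltration is the one prioritized technique with no detection mapped to it, which makes it an obvious candidate for the next rule or model feature.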

When combined with daily operations and periodic updates, these governance measures create a comprehensive security lifecycle. Implementing AI Trust, Risk, and Security Management (TRiSM) controls is projected to reduce inaccurate or unauthorized outputs by at least 50% by 2026.

Best Practices for AI Intrusion Detection

Best Practices for Effective Use

Start by auditing your security tools to identify built-in AI features in platforms like SIEM and SOAR. These can help cut down on alert fatigue and focus on high-priority incidents. Once you know what you’re working with, take a phased approach: first establish policies (Govern), then test and audit (Manage), and finally implement technical controls (Secure). This step-by-step strategy avoids the pitfalls of expensive, rushed implementations.

Adopt a Zero Trust mindset – assume nothing is safe until verified. Every system action should require authentication and authorization, and continuous monitoring is a must. Assign AI to handle low-risk, high-volume tasks like grouping duplicate alerts or adding context to security tickets. As your confidence in the system grows, you can gradually expand its responsibilities.

Human oversight is non-negotiable. Clearly label each workflow step as "human-led", "AI-assisted", or "automated" to avoid over-reliance on AI. For low-risk tasks, establish feedback loops so analysts can flag errors and help refine the AI’s performance over time. To protect against prompt injection attacks, implement strict input validation and keep an eye out for data drift or repetitive patterns that might indicate a breach.

Safeguard your AI models by using cryptographic hashes and digital signatures to ensure they remain untampered. Store model weights securely in vaults or Hardware Security Modules (HSMs). Regular red-teaming exercises can help identify weak spots. Additionally, use Data Loss Prevention (DLP) tools to clearly define data boundaries and prevent the AI from leaking sensitive information.
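As a rough illustration of model integrity checking, the sketch below fingerprints a model file with SHA-256 and verifies a keyed signature before loading. It makes a deliberate simplification: HMAC-SHA256 from Python's standard library stands in for a true digital signature, which would normally use an asymmetric key pair held in an HSM. The key and model bytes are placeholders.

```python
import hashlib
import hmac


def fingerprint(model_bytes: bytes) -> str:
    """SHA-256 hash of the model file, suitable for recording in an inventory."""
    return hashlib.sha256(model_bytes).hexdigest()


def sign(model_bytes: bytes, key: bytes) -> str:
    """Keyed signature over the model (HMAC as a stand-in for a real signature)."""
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()


def verify(model_bytes: bytes, key: bytes, expected_signature: str) -> bool:
    """Constant-time check that the model matches its recorded signature."""
    return hmac.compare_digest(sign(model_bytes, key), expected_signature)


# Placeholder values; in practice the key comes from a vault or HSM.
key = b"demo-key-do-not-use"
released_model = b"weights-v1"
signature = sign(released_model, key)

print(verify(released_model, key, signature))
print(verify(b"weights-v1-tampered", key, signature))
```

Running the check at load time, rather than only at release, is what catches a model that was swapped or altered after deployment.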

While these practices strengthen your AI defenses, overlooking critical details can lead to common mistakes.

Common Mistakes to Avoid

Even with the right strategies, certain missteps can undermine your AI intrusion detection system (IDS). One major issue is failing to draw clear lines between AI tasks and human decisions. AI can handle sorting and providing context, but humans must make the final call on high-stakes actions. Without this balance, you risk acting on AI outputs that are confidently incorrect – also known as hallucinations.

Another mistake is ignoring Shadow AI, or unapproved tools that employees use without IT’s knowledge. These tools can expose sensitive data, with 80% of business leaders citing data leakage as their top AI concern. Automated scanning can help track and manage all AI tools in your environment.

Treating AI as a "black box" is another common error. If you can’t explain why the system flagged a threat, it becomes harder to trust its outputs. Make sure all decisions are logged and traceable so analysts can understand the reasoning behind them. Similarly, avoid giving AI agents excessive access to internal data. If compromised, they can quickly become a major liability.

Lastly, don’t overlook data governance. AI requires access to large datasets, but failing to enforce retention policies and access controls can lead to compliance issues. With 52% of leaders unsure how to navigate regulations like the EU AI Act, establishing clear governance frameworks is critical.

Benefits and Challenges

Balancing the pros and cons of AI intrusion detection is crucial for aligning it with your overall security goals.

| Feature | Benefits | Challenges |
|---|---|---|
| Detection Speed | Speeds up threat detection by analyzing massive datasets. | Requires significant computing power to secure model weights. |
| Operational Efficiency | Automates repetitive tasks like grouping alerts and adding context. | Risk of generating inaccurate outputs (hallucinations). |
| Threat Adaptation | Detects complex, evolving threats that traditional systems might miss. | Susceptible to attacks like prompt injection and data poisoning. |
| Scalability | Scales defenses to match growing attack surfaces. | Creates accountability challenges when AI operates without direct oversight. |

Conclusion

AI-driven intrusion detection has become a critical tool for addressing the ever-evolving landscape of security threats. Traditional methods simply can’t keep up with the complexity and speed of today’s expanding attack surfaces. By monitoring interconnected systems, AI bridges the gap between digital and physical security, identifying systemic risks that could potentially trigger widespread operational failures across a business’s infrastructure.

This shift represents a move toward a more proactive and integrated approach to defense. Consider this: 88% of organizations are concerned about malicious actors exploiting vulnerabilities in AI systems, and 80% of business leaders rank data leakage as their top worry. These challenges demand automated, real-time detection capabilities, as manual processes lack the speed needed to counter dynamic and fast-moving threats.

"AI is a game changer – but only if you can secure it." – Microsoft

This statement underscores the importance of a defense strategy that goes beyond reactive measures. A proactive approach means embedding security throughout the entire lifecycle of your systems, not just responding after an issue arises. AI not only enhances detection but also addresses the global shortage of cybersecurity professionals by automating repetitive tasks, summarizing alerts, and recommending actionable mitigations. This allows human analysts to focus on higher-level decision-making while AI handles the heavy lifting of sorting and identifying patterns.

However, successful implementation requires more than just deploying AI. Clear governance, consistent human oversight, and ongoing adjustments ensure that your defenses stay effective against threats that traditional systems often overlook. Whether you’re protecting digital assets, physical infrastructure, or both, AI-powered intrusion detection offers the speed, scalability, and flexibility that modern businesses need.

For organizations looking to strengthen their security posture, partnering with local experts like Sherlock’s Locksmith can add an extra layer of protection to your overall strategy.

FAQs

How does AI enhance the accuracy of intrusion detection compared to traditional systems?

AI-powered intrusion detection systems take accuracy to a new level by using machine learning and deep learning to study and recognize normal traffic patterns. This allows them to spot subtle irregularities and respond to evolving threats. As a result, they reduce false alarms while catching advanced attacks that older, signature-based systems might miss entirely.

What sets these systems apart is their ability to learn continuously from new data. Instead of relying on static rules, they adapt in real time to stay ahead of emerging cybersecurity risks. This makes them a more dependable and efficient way to protect businesses from both familiar and unexpected threats.

What should businesses consider when choosing an AI-powered intrusion detection system (IDS) deployment model?

When choosing an AI-powered intrusion detection system (IDS), the first step is deciding where the system will operate – on-premises, in the cloud, or through a hybrid setup. Each option has its advantages and trade-offs. On-premises solutions give businesses greater control over sensitive data and help meet strict compliance standards. However, they come with higher upfront costs and ongoing maintenance demands. Cloud-based systems, on the other hand, are more scalable and budget-friendly but can raise concerns about data privacy and reliance on third-party vendors. A hybrid approach offers a middle ground, keeping critical data on-site while using the cloud for advanced analytics. That said, hybrid setups require careful planning to ensure smooth coordination.

Next, consider the resources and expertise required to implement and maintain the IDS. AI-driven systems can be resource-heavy, making cloud-based options appealing for smaller teams with limited infrastructure. It’s also essential to assess how well the IDS integrates with your existing security tools, how frequently it updates to tackle emerging threats, and whether it offers transparency in its decision-making process. Lastly, evaluate the total cost of ownership – not just the licensing fees but also expenses like staffing and potential risks. This ensures the system aligns with your organization’s goals and compliance requirements.

How can businesses stay compliant and ensure proper governance when using AI-powered intrusion detection systems?

To maintain compliance and proper governance with AI-powered intrusion detection systems (IDS), businesses should adopt a risk-based framework that prioritizes accountability and transparency. Start by defining clear ownership of the system, documenting every stage of the AI model’s lifecycle, and putting controls in place to monitor its ongoing operation. Using established resources like the NIST AI Risk Management Framework or MITRE ATLAS can provide a solid foundation for standardizing processes related to data management, model validation, and audits.

From an operational perspective, treat the AI-IDS as a key component of your security strategy. Regular audits are essential, as is encrypting data both during transfer and while stored. Keeping an eye on model drift will ensure the system continues to perform effectively over time. Governance measures should also include role-based access controls, immutable logs of system activities, and a well-defined incident response plan that addresses risks unique to AI systems. Bringing in third-party assessments on a regular basis and staying updated on regulations will help maintain compliance while ensuring the IDS remains a reliable tool for detecting threats.